Hello everyone!

I would like to propose a LIP for the roadmap objective “Define Lisk Mainnet migration”. The LIP describes the process of switching from Lisk Core 3 to Lisk Core 4, including the snapshot block generation, snapshot block processing, the bootstrap period and the network behavior.

I’m looking forward to your feedback.

Here is a complete LIP draft:

LIP: <LIP number>

Title: Define Mainnet Configuration and Migration for Lisk Core 4

Author: Andreas Kendziorra <andreas.kendziorra@lightcurve.io>

Type: Standards Track

Created: <YYYY-MM-DD>

Updated: <YYYY-MM-DD>

Requires: 0060

Abstract

This proposal defines the configuration of Lisk Core 4, including the selection of modules and the choice of some configurable constants. Moreover, it defines the migration process from Lisk Core 3 to Lisk Core 4. As for the previous hard fork, a snapshot block is used that contains a snapshot of the state. This snapshot block is then treated like a genesis block by Lisk Core 4 nodes. In contrast to the previous hard fork, the existing block and transaction history is not discarded.

Copyright

This LIP is licensed under the Creative Commons Zero 1.0 Universal.

Motivation

This LIP is motivated by two points. The first is that the Lisk SDK 6 introduces several new configurable settings. These include the set of existing modules that can or even must be registered, as well as some new constants that must be specified for each chain. These configurations must therefore be specified for Lisk Core 4 as well, which is done within this LIP.

The second point is the migration from Lisk Core 3 to Lisk Core 4, for which a process must be defined. This shall also be done within this LIP.

Specification

Constants

The following table defines some constants that will be used in the remainder of this document.

| Name | Type | Value | Description |
|---|---|---|---|
| DUMMY_PROOF_OF_POSSESSION | bytes | byte string of length 96, each byte set to zero | A dummy value for a proof of possession. The DPoS module requires that each delegate account contained in a snapshot/genesis block has such an entry. However, the proofs of possession are not verified for this particular snapshot block. This value will be used for every delegate account in the snapshot block. |
| TOKEN_ID_LSK_MAINCHAIN | object | {"chainID": 0, "localID": 0} | Token ID of the LSK token on mainchain. |
| LOCAL_ID_LSK | uint32 | 0 | The local ID of the LSK token on mainchain. |
| MODULE_ID_DPOS | uint32 | TBD | Module ID of the DPoS Module. |
| MODULE_ID_AUTH | uint32 | TBD | Module ID of the Auth Module. |
| MODULE_ID_TOKEN | uint32 | TBD | Module ID of the Token Module. |
| MODULE_ID_LEGACY | uint32 | TBD | Module ID of the Legacy Module. |
| INVALID_ED25519_KEY | bytes | | An Ed25519 public key for which the signature validation always fails. This value is used for the generatorPublicKey property of validators in the snapshot block for which no public key is contained within the history since the last snapshot block. |
| DPOS_INIT_ROUNDS | uint32 | 60,480 | The number of rounds for the bootstrap period following the snapshot block. This number corresponds to one week assuming no missed blocks. |
| HEIGHT_SNAPSHOT | uint32 | TBD | The height of the block from which a state snapshot is taken. This block must be the last block of a round. The snapshot block then has the height HEIGHT_SNAPSHOT + 1. |
| HEIGHT_PREVIOUS_SNAPSHOT_BLOCK | uint32 | 16,270,293 | The height of the snapshot block used for the migration from Lisk Core 2 to Lisk Core 3. |
| SNAPSHOT_TIME_GAP | uint32 | TBD | The number of seconds between the block at height HEIGHT_SNAPSHOT and the snapshot block. |
| SNAPSHOT_BLOCK_VERSION | uint32 | 0 | The block version of the snapshot block. |

Mainnet Configuration

Modules

Lisk Core 4 will use the following modules:

Constants

Some new LIPs introduced configurable constants. The values for those constants are specified in the following table.

| Module/LIP | Constant Name | Value |
|---|---|---|
| BFT Module | LSK_BFT_BATCH_SIZE | 103 |
| LIP 0055 | MAX_TRANSACTIONS_SIZE_BYTES | 15,360 |
| LIP 0055 | MAX_ASSET_DATA_SIZE_BYTES | 18 (the Reward Module is the only module adding an entry to the assets property, whereby the size of the data property will not exceed 18 bytes) |
| DPoS Module | FACTOR_SELF_VOTES | 10 |
| DPoS Module | MAX_LENGTH_NAME | 20 |
| DPoS Module | MAX_NUMBER_SENT_VOTES | 10 |
| DPoS Module | MAX_NUMBER_PENDING_UNLOCKS | 20 |
| DPoS Module | FAIL_SAFE_MISSED_BLOCKS | 50 |
| DPoS Module | FAIL_SAFE_INACTIVE_WINDOW | 260,000 |
| DPoS Module | PUNISHMENT_WINDOW | 780,000 |
| DPoS Module | ROUND_LENGTH | 103 |
| DPoS Module | BFT_THRESHOLD | 68 |
| DPoS Module | MIN_WEIGHT_STANDBY | 1000 x (10^8) |
| DPoS Module | NUMBER_ACTIVE_DELEGATES | 101 |
| DPoS Module | NUMBER_STANDBY_DELEGATES | 2 |
| DPoS Module | TOKEN_ID_DPOS | TOKEN_ID_LSK = {"chainID": 0, "localID": 0} |
| DPoS Module | DELEGATE_REGISTRATION_FEE | 10 x (10^8) |
| Random Module | MAX_LENGTH_VALIDATOR_REVEALS | 206 |
| Fee Module | MIN_FEE_PER_BYTE | 1000 |
| Fee Module | TOKEN_ID_FEE | TOKEN_ID_LSK = {"chainID": 0, "localID": 0} |
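As a small illustration, a few of the values above can be cross-checked against each other. The relationships tested here (round length equal to active plus standby delegates, BFT batch size equal to round length, reveal list length equal to two rounds) are observations about the numbers in the table, not rules stated in this draft, and the names `MAINNET_CONSTANTS` and `check_constants` are hypothetical.

```python
# Values copied from the constants table above. The relationships checked
# below are observations about the numbers, not rules stated in this draft.
MAINNET_CONSTANTS = {
    "LSK_BFT_BATCH_SIZE": 103,
    "ROUND_LENGTH": 103,
    "NUMBER_ACTIVE_DELEGATES": 101,
    "NUMBER_STANDBY_DELEGATES": 2,
    "MAX_LENGTH_VALIDATOR_REVEALS": 206,
    "MIN_WEIGHT_STANDBY": 1000 * 10**8,
    "DELEGATE_REGISTRATION_FEE": 10 * 10**8,
}


def check_constants(c):
    """Return a list of human-readable inconsistencies (empty if none)."""
    problems = []
    delegates = c["NUMBER_ACTIVE_DELEGATES"] + c["NUMBER_STANDBY_DELEGATES"]
    if c["ROUND_LENGTH"] != delegates:
        problems.append("ROUND_LENGTH differs from active + standby delegates")
    if c["LSK_BFT_BATCH_SIZE"] != c["ROUND_LENGTH"]:
        problems.append("LSK_BFT_BATCH_SIZE differs from ROUND_LENGTH")
    if c["MAX_LENGTH_VALIDATOR_REVEALS"] != 2 * c["ROUND_LENGTH"]:
        problems.append("MAX_LENGTH_VALIDATOR_REVEALS differs from two rounds")
    return problems


if __name__ == "__main__":
    print(check_constants(MAINNET_CONSTANTS))
```

A check like this could run in a configuration test so that a later change to one constant does not silently break a value that appears to depend on it.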

Mainnet Migration

Process

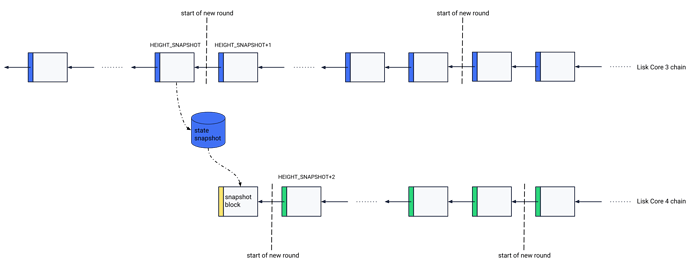

Figure 1: Overview of the migration process. Elements in blue are created by Lisk Core 3, including the state snapshot. Elements in yellow - the snapshot block - are created by the migrator tool. Elements in green are created by Lisk Core 4.

The migration from Lisk Core 3 to Lisk Core 4, also depicted in Figure 1, is performed by the following steps:

1. Nodes run Lisk Core 3, where the following steps are done:

   i. After a block at height HEIGHT_SNAPSHOT was processed, a snapshot of the state is performed, which we denote by STATE_SNAPSHOT. If this block is reverted and a new block for this height is processed, then STATE_SNAPSHOT also needs to be computed again.

   ii. Nodes continue processing blocks until the block at height HEIGHT_SNAPSHOT is final.

   iii. Once the block at height HEIGHT_SNAPSHOT is final, nodes can stop forging and processing new blocks. Finalized blocks with a height larger than or equal to HEIGHT_SNAPSHOT+1 are discarded.

2. Nodes compute a snapshot block as defined below using a migrator tool and persist this one locally.

3. Nodes start to use Lisk Core 4, where the steps described in the section Starting Lisk Core 4 are executed.

4. Once the timeslot of the snapshot block at height HEIGHT_SNAPSHOT+1 is passed, the first round following the new protocol starts.

Starting Lisk Core 4

When Lisk Core 4 is started for the first time, the following steps are performed:

1. Get the snapshot block (the one for height HEIGHT_SNAPSHOT+1):

   i. Check if the snapshot block for height HEIGHT_SNAPSHOT+1 exists locally. If yes, fetch this block. If not, stop here.

2. Process the snapshot block as described in LIP 0060.

3. Check if a database from Lisk Core 3 containing all blocks between heights HEIGHT_PREVIOUS_SNAPSHOT_BLOCK and HEIGHT_SNAPSHOT (inclusive) can be found locally. If yes:

   i. Fetch these blocks from highest height to lowest. Each block is validated using minimal validation steps as defined below. If this validation step passes, the block and its transactions are persisted in the database.

   ii. Skip steps 4 and 5.

4. Fetch all blocks between heights HEIGHT_PREVIOUS_SNAPSHOT_BLOCK+1 and HEIGHT_SNAPSHOT (inclusive) via peer-to-peer from highest height to lowest. Each block is validated using minimal validation steps as defined below. If this validation step passes, the block and its transactions are persisted in the database.

5. The snapshot block for the height HEIGHT_PREVIOUS_SNAPSHOT_BLOCK is downloaded from a server. The URL for the source can be configured in Lisk Core 4+. When downloaded, it is validated using minimal validation steps as defined below. If this validation step passes, the block is persisted in the database.

Due to step 1.i, it is a requirement to run Lisk Core 3 and the migrator tool before running Lisk Core 4. However, nodes starting some time after the migration may fetch the snapshot block and its preceding blocks without first running Lisk Core 3 and the migrator tool, as described in the following subsection.

Fetching Block History and Snapshot Block without Running Lisk Core 3

Once the snapshot block at height HEIGHT_SNAPSHOT+1 is final, a new version of Lisk Core 4 can be released that has the block ID of this snapshot block hard-coded. In the following, we denote this version by Lisk Core 4+. When a Lisk Core 4+ node starts for the first time, the same steps as described above are executed, except that step 1 is replaced by the following:

1. Get the snapshot block (the one for height HEIGHT_SNAPSHOT+1):

   i. Check if the snapshot block for height HEIGHT_SNAPSHOT+1 exists locally. If yes:

      a. Fetch this block.

      b. Verify that the block ID of this block matches the hard-coded block ID of the snapshot block. If yes, skip step ii. If not, continue with step ii.

   ii. The snapshot block for height HEIGHT_SNAPSHOT+1 is downloaded from a server. The URL for the source can be configured in Lisk Core 4+. When downloaded, it must first be verified that the block ID of this block matches the hard-coded block ID of the snapshot block. If not, stop here (the process should be repeated, but the node operator should specify a new server to download the snapshot block from).

Note that once the snapshot block for height HEIGHT_SNAPSHOT+1 is processed, the node should start its regular block synchronization, i.e., fetching the blocks with height larger than HEIGHT_SNAPSHOT+1. The steps 3 to 5 from above could run in the background with low priority.
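The replacement for step 1 can be sketched as follows. This is only an illustration under stated assumptions: the callables `load_local`, `download` and `block_id` are hypothetical stand-ins for the node's storage, HTTP client and block ID computation, and `toy_id_of_bytes` uses a plain SHA-256 over the raw bytes purely so that the example is runnable.

```python
import hashlib
from typing import Callable, Optional


class SnapshotBlockError(Exception):
    """Raised when no snapshot block with the expected ID can be obtained."""


def toy_id_of_bytes(block_bytes: bytes) -> bytes:
    # Stand-in for the real block ID computation (assumption for the demo).
    return hashlib.sha256(block_bytes).digest()


def get_snapshot_block(
    expected_block_id: bytes,
    load_local: Callable[[], Optional[bytes]],
    download: Callable[[], bytes],
    block_id: Callable[[bytes], bytes],
) -> bytes:
    # Step i: use a locally stored snapshot block if its ID matches the
    # hard-coded one.
    local = load_local()
    if local is not None and block_id(local) == expected_block_id:
        return local
    # Step ii: otherwise download the block and verify its ID before use.
    downloaded = download()
    if block_id(downloaded) != expected_block_id:
        raise SnapshotBlockError(
            "downloaded snapshot block has an unexpected ID; "
            "configure another server and retry"
        )
    return downloaded


if __name__ == "__main__":
    block = b"serialized snapshot block"
    expected = toy_id_of_bytes(block)
    # No local copy, so the block is taken from the (fake) server.
    print(get_snapshot_block(expected, lambda: None, lambda: block,
                             toy_id_of_bytes) == block)
```

Passing the storage and network access in as callables keeps the decision logic testable without a database or a server.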

Minimal Validation of Core 3 Blocks

A block created by Lisk Core 3, i.e., a block with a height between HEIGHT_PREVIOUS_SNAPSHOT_BLOCK and HEIGHT_SNAPSHOT (inclusive), is validated as follows:

- Verify that the block follows the block schema defined in LIP 0029.

- Compute the block ID as defined in LIP 0029 and verify that it is equal to the previousBlockID property of the child block.

- Verify that the transactions in the payload yield the transaction root provided in the block header.

If any of the steps fails, the block is invalid.
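The backward validation can be illustrated with a toy model. Everything here is a simplification for illustration: `ToyBlock`, its header serialization and `toy_transaction_root` are hypothetical and do not follow the LIP 0029 schema or Lisk's Merkle root, and the schema check from the first step is omitted. The point is only the chaining: starting from the previousBlockID of the already trusted child, each block's ID and transaction root are checked while walking from highest to lowest height.

```python
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass
class ToyBlock:
    height: int
    previous_block_id: bytes
    transaction_root: bytes
    transactions: List[bytes]

    def header_bytes(self) -> bytes:
        # Hypothetical serialization; not the LIP 0029 block schema.
        return (self.height.to_bytes(4, "big")
                + self.previous_block_id
                + self.transaction_root)


def toy_block_id(block: ToyBlock) -> bytes:
    return hashlib.sha256(block.header_bytes()).digest()


def toy_transaction_root(transactions: List[bytes]) -> bytes:
    # Stand-in for a Merkle root: hash over the transaction hashes.
    h = hashlib.sha256()
    for tx in transactions:
        h.update(hashlib.sha256(tx).digest())
    return h.digest()


def make_block(height: int, prev_id: bytes, txs: List[bytes]) -> ToyBlock:
    return ToyBlock(height, prev_id, toy_transaction_root(txs), txs)


def validate_history(child_previous_block_id: bytes,
                     blocks_desc: List[ToyBlock]) -> bool:
    """Validate blocks given in order from highest to lowest height."""
    expected_id = child_previous_block_id
    for block in blocks_desc:
        if toy_block_id(block) != expected_id:
            return False  # block ID does not match the child's reference
        if toy_transaction_root(block.transactions) != block.transaction_root:
            return False  # payload does not match the header
        expected_id = block.previous_block_id
    return True


if __name__ == "__main__":
    first = make_block(1, b"\x00" * 32, [b"tx-a"])
    second = make_block(2, toy_block_id(first), [b"tx-b", b"tx-c"])
    print(validate_history(toy_block_id(second), [second, first]))
```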

Snapshot Block

The snapshot block b is constructed in accordance with the definition of genesis block in LIP 0060. The details for the header and assets property are specified in the following subsections.

Header

Let a be the block at height HEIGHT_SNAPSHOT. Then the following points define how b.header is constructed:

- b.header.version = SNAPSHOT_BLOCK_VERSION

- b.header.timestamp = a.header.timestamp + SNAPSHOT_TIME_GAP

- b.header.height = HEIGHT_SNAPSHOT+1

- b.header.previousBlockID = blockID(a)

- all other block header properties must be as specified in LIP 0060

Assets

From the registered modules, the following ones add an entry to b.assets:

- Legacy module

- Token module

- Auth module

- DPoS module

How these modules construct their entry for the assets property is defined in the next subsections. The verification of their entries is defined in the Genesis Block Processing sections of the respective module LIPs. Note that once all modules add their entries to b.assets, this array must be sorted by increasing value of moduleID.

In the following, let accountsState be the key-value store of the accounts state of STATE_SNAPSHOT computed as described above. That means, for a byte array addr representing an address, accountsState[addr] is the corresponding account object following the account schema defined in LIP 0030. Moreover, let accounts be the array that contains all values of accountsState for which the key is a 20-byte address sorted in lexicographical order of their address property. Correspondingly, let legacyAccounts be the array that contains all values of accountsState for which the key is an 8-byte address sorted in lexicographical order of their address property.
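The construction of the two arrays can be sketched as follows, assuming for illustration that the accounts state is available as a dictionary from address bytes to account objects (here plain dictionaries with an `address` key). The function name `split_accounts` is hypothetical. Python compares `bytes` values lexicographically, which matches the required ordering.

```python
def split_accounts(accounts_state):
    """Split the accounts state into regular and legacy accounts.

    accounts_state maps address bytes to account dicts that contain an
    "address" key (a simplified stand-in for the LIP 0030 account schema).
    """
    accounts = sorted(
        (acc for addr, acc in accounts_state.items() if len(addr) == 20),
        key=lambda acc: acc["address"],
    )
    legacy_accounts = sorted(
        (acc for addr, acc in accounts_state.items() if len(addr) == 8),
        key=lambda acc: acc["address"],
    )
    return accounts, legacy_accounts


if __name__ == "__main__":
    state = {
        b"\x02" * 20: {"address": b"\x02" * 20},
        b"\x01" * 20: {"address": b"\x01" * 20},
        b"\x09" * 8: {"address": b"\x09" * 8},
    }
    regular, legacy = split_accounts(state)
    print(len(regular), len(legacy))
```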

Assets Entry for Legacy Module

The assets entry for the legacy module is added by the logic defined in the function addLegacyModuleEntry in the following pseudo code:

    addLegacyModuleEntry():
        legacyObj = {}
        legacyObj.accounts = []
        for every account in legacyAccounts:
            userObj = {}
            userObj.address = account.address
            userObj.balance = account.token.balance
            legacyObj.accounts.append(userObj)
        data = serialization of legacyObj using genesisLegacyStoreSchema
        append {"moduleID": MODULE_ID_LEGACY, "data": data} to b.assets

Assets Entry for Token Module

The assets entry for the token module is added by the logic defined in the function addTokenModuleEntry in the following pseudo code:

    addTokenModuleEntry():
        tokenObj = {}
        tokenObj.userSubstore = createUserSubstoreArray()
        tokenObj.supplySubstore = createSupplySubstoreArray()
        tokenObj.escrowSubstore = []
        tokenObj.availableLocalIDSubstore = {}
        tokenObj.availableLocalIDSubstore.nextAvailableLocalID = LOCAL_ID_LSK + 1
        tokenObj.terminatedEscrowSubstore = []
        data = serialization of tokenObj using genesisTokenStoreSchema
        append {"moduleID": MODULE_ID_TOKEN, "data": data} to b.assets

    createUserSubstoreArray():
        userSubstore = []
        for every account in accounts:
            userObj = {}
            userObj.address = account.address
            userObj.tokenID = TOKEN_ID_LSK_MAINCHAIN
            userObj.availableBalance = account.token.balance
            userObj.lockedBalances = getLockedBalances(account)
            if (userObj.availableBalance == 0 and userObj.lockedBalances == []):
                continue
            userSubstore.append(userObj)
        return userSubstore

    getLockedBalances(account):
        amount = 0
        for vote in account.dpos.sentVotes:
            amount += vote.amount
        for unlockingObj in account.dpos.unlocking:
            amount += unlockingObj.amount
        if amount > 0:
            return [{"moduleID": MODULE_ID_DPOS, "amount": amount}]
        else:
            return []

    createSupplySubstoreArray():
        totalLSKSupply = 0
        for every account in accounts:
            totalLSKSupply += account.token.balance
            lockedBalances = getLockedBalances(account)
            if lockedBalances is not empty:
                totalLSKSupply += lockedBalances[0].amount
        LSKSupply = {"localID": LOCAL_ID_LSK, "totalSupply": totalLSKSupply}
        return [LSKSupply]
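The locked balance and total supply computations can be tried out with a small runnable version. This is a sketch that mirrors the pseudocode with accounts modelled as plain dictionaries; the module ID is passed in as a parameter because its value is still TBD in this draft, and the `0` used in the demo is only a placeholder.

```python
def get_locked_balances(account, module_id_dpos):
    """Sum the voted and unlocking amounts into one locked balance entry."""
    amount = sum(vote["amount"] for vote in account["dpos"]["sentVotes"])
    amount += sum(obj["amount"] for obj in account["dpos"]["unlocking"])
    if amount > 0:
        return [{"moduleID": module_id_dpos, "amount": amount}]
    return []


def total_lsk_supply(accounts, module_id_dpos):
    """Total supply = available balances plus all locked balances."""
    total = 0
    for account in accounts:
        total += account["token"]["balance"]
        for locked in get_locked_balances(account, module_id_dpos):
            total += locked["amount"]
    return total


if __name__ == "__main__":
    placeholder_module_id = 0  # placeholder only; the real value is TBD
    voter = {
        "token": {"balance": 5},
        "dpos": {"sentVotes": [{"amount": 3}], "unlocking": [{"amount": 2}]},
    }
    holder = {
        "token": {"balance": 7},
        "dpos": {"sentVotes": [], "unlocking": []},
    }
    print(total_lsk_supply([voter, holder], placeholder_module_id))
```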

Assets Entry for Auth Module

The assets entry for the auth module is added by the logic defined in the function addAuthModuleEntry in the following pseudo code:

    addAuthModuleEntry():
        authDataSubstore = []
        for every account in accounts:
            authObj = {}
            authObj.numberOfSignatures = account.keys.numberOfSignatures
            authObj.mandatoryKeys = account.keys.mandatoryKeys
            authObj.optionalKeys = account.keys.optionalKeys
            authObj.nonce = account.sequence.nonce
            entry = {address: account.address, authAccount: authObj}
            authDataSubstore.append(entry)
        data = serialization of authDataSubstore using genesisAuthStoreSchema
        append {"moduleID": MODULE_ID_AUTH, "data": data} to b.assets

Assets Entry for DPoS Module

The assets entry for the DPoS module is added by the logic defined in the function addDPoSModuleEntry in the following pseudo code:

    addDPoSModuleEntry():
        DPoSObj = {}
        DPoSObj.validators = createValidatorsArray()
        DPoSObj.voters = createVotersArray()
        DPoSObj.snapshots = []
        DPoSObj.genesisData = createGenesisDataObj()
        data = serialization of DPoSObj using genesisDPoSStoreSchema
        append {"moduleID": MODULE_ID_DPOS, "data": data} to b.assets

    createValidatorsArray():
        validators = []
        validatorKeys = getValidatorKeys()
        for every account in accounts:
            if account.dpos.delegate.username == "":
                continue
            validator = {}
            validator.address = account.address
            validator.name = account.dpos.delegate.username
            validator.blsKey = INVALID_BLS_KEY
            validator.proofOfPossession = DUMMY_PROOF_OF_POSSESSION
            if validatorKeys[account.address]:
                validator.generatorKey = validatorKeys[account.address]
            else:
                validator.generatorKey = INVALID_ED25519_KEY
            validator.lastGeneratedHeight = account.dpos.delegate.lastForgedHeight
            validator.isBanned = account.dpos.delegate.isBanned
            validator.pomHeights = account.dpos.delegate.pomHeights
            validator.consecutiveMissedBlocks = account.dpos.delegate.consecutiveMissedBlocks
            validators.append(validator)
        return validators

    # This function gets the public keys of the registered validators,
    # i.e., the accounts for which account.dpos.delegate.username is not
    # the empty string, from the history of Lisk Core 3 blocks and
    # transactions.
    getValidatorKeys():
        let keys be an empty dictionary
        for every block c with height in [HEIGHT_PREVIOUS_SNAPSHOT_BLOCK + 1, HEIGHT_SNAPSHOT]:
            address = address(c.generatorPublicKey)
            keys[address] = c.generatorPublicKey
            for trs in c.transactions:
                address = address(trs.senderPublicKey)
                if the account corresponding to address is a registered validator:
                    keys[address] = trs.senderPublicKey
        return keys

    createVotersArray():
        voters = []
        for every account in accounts:
            if account.dpos.sentVotes == [] and account.dpos.unlocking == []:
                continue
            voter = {}
            voter.address = account.address
            voter.sentVotes = account.dpos.sentVotes
            voter.pendingUnlocks = account.dpos.unlocking
            voters.append(voter)
        return voters

    createGenesisDataObj():
        genesisDataObj = {}
        genesisDataObj.initRounds = DPOS_INIT_ROUNDS
        r = round number for the block a
        Let topDelegates be the set of the top 101 non-banned delegate accounts by delegate weight at the end of round r-2
        initDelegates = [account.address for account in topDelegates]
        sort initDelegates in lexicographical order
        genesisDataObj.initDelegates = initDelegates
        return genesisDataObj
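The key collection in getValidatorKeys can be sketched in runnable form. The sketch assumes that a 20-byte address is the first 20 bytes of the SHA-256 hash of the public key, and it models blocks and transactions as plain dictionaries; both are simplifications for illustration. The fallback key is passed in as a parameter (`invalid_key`), and the function names are hypothetical.

```python
import hashlib


def address_from_public_key(public_key: bytes) -> bytes:
    # Assumption: address = first 20 bytes of SHA-256(public key).
    return hashlib.sha256(public_key).digest()[:20]


def get_validator_keys(blocks, registered_validators):
    """Collect public keys of registered validators from the block history.

    blocks: iterable of dicts with "generatorPublicKey" and "transactions".
    registered_validators: set of addresses of registered validators.
    """
    keys = {}
    for block in blocks:
        generator_key = block["generatorPublicKey"]
        # Block generators are validators by definition.
        keys[address_from_public_key(generator_key)] = generator_key
        for trs in block["transactions"]:
            sender_key = trs["senderPublicKey"]
            address = address_from_public_key(sender_key)
            if address in registered_validators:
                keys[address] = sender_key
    return keys


def generator_key_for(address, keys, invalid_key):
    # Validators without a known public key get the invalid key assigned.
    return keys.get(address, invalid_key)


if __name__ == "__main__":
    forger = b"\x01" * 32
    sender = b"\x02" * 32
    history = [{"generatorPublicKey": forger,
                "transactions": [{"senderPublicKey": sender}]}]
    found = get_validator_keys(history, {address_from_public_key(sender)})
    print(len(found))
```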

Rationale

Keeping Lisk Core 3 Block History

The decision to discard the block history of Lisk Mainnet at the hard fork from Lisk Core 2 to Lisk Core 3 was considered rather disadvantageous. One reason is that the transaction history of an account cannot be retrieved anymore from a node running Lisk Core 3. Therefore, the history of blocks created with Lisk Core 3 shall be kept on Lisk Core 4 nodes.

Getting the Lisk Core 3 Block History

In order to keep the implementation of Lisk Core 4 as clean as possible, Lisk Core 4 does not process Lisk Core 3 blocks, but only ensures their integrity. This is, however, sufficient for maintaining the Lisk Core 3 block history and guaranteeing the immutability of this block history.

Once Lisk Core 4 has processed the snapshot block, it can fetch the whole Lisk Core 3 block history, from the highest to the lowest height. This allows each block to be validated by simply checking that its block ID matches the previousBlockID property of the child block and that the payload matches the transactionRoot property. Thus, almost no protocol rules related to Lisk Core 3 must be implemented, which keeps the implementation clean. The Lisk Core 3 history can be fetched from a database created by Lisk Core 3 on the same computer or from the peer-to-peer network. Note that the first Lisk Core 3 block - the snapshot block of the previous migration - is an exception, as this one is either downloaded from a server or fetched from a database created by Lisk Core 3 on the same computer.

Fetching Snapshot Blocks

The snapshot block created for this hard fork as well as the one used for the previous hard fork are not shared via peer-to-peer due to their large size. Instead, they are either downloaded from a server or must be found locally.

Initial Version of Lisk Core 4

The initial version of Lisk Core 4 cannot download the snapshot block of this hard fork from a server because it could not validate the block. Instead, it must find the snapshot block locally on the same computer. This requires that the snapshot block is created using a migrator tool on the same node, which in turn requires that the node runs the latest patched version of Lisk Core 3 until the block at height HEIGHT_SNAPSHOT is final.

Later Versions of Lisk Core 4

Once the snapshot block is final, a patched version of Lisk Core 4 will be released in which the block ID of the snapshot block is hard-coded. This allows a downloaded snapshot block to be validated by simply checking its block ID.

Downloading from Server

The URL can be configured. By default, the URL points to a server of the Lisk Foundation. The snapshot block of this hard fork is validated by checking that its block ID matches the hard-coded block ID in the patched version of Lisk Core 4. The snapshot block of the previous migration is validated by checking that its block ID matches the previousBlockID property of its child block (see also here). Hence, downloading them from a non-trusted server raises no security concerns. Users can nevertheless configure their node to download the blocks from a server of their choice, and the snapshot blocks could be provided by any user. This may also be helpful in situations where the Lisk Foundation server is down.

Assigning Generator Key to Validator Accounts

In the protocol used by Lisk Core 4, each validator needs a generator key in order to generate blocks. Moreover, the DPoS module expects that each validator account has such a key specified in the snapshot block. Otherwise, the snapshot block is invalid (see here). Therefore, the aim is to use the existing public keys of the validator accounts as the initial generator keys in the snapshot block. However, since Lisk Core 3 the public key is no longer part of the account state. For this reason, the whole block history from the previous snapshot block onwards is scanned for public keys of registered validators. The keys found are used as generator keys in the snapshot block. Validator accounts for which no public key was found get an “invalid” public key assigned, i.e., one for which every signature validation will fail. The specific value is discussed in the following subsection. Those validators should send an update generator key transaction as soon as possible once Lisk Core 4 is used, ideally within the bootstrap period. Otherwise, those validators can still be active validators, but are not able to generate valid blocks as they cannot create valid block signatures. In the worst case, this may lead to banning, which happens after 50 blocks in a row are missed.

To avoid the need to send an update generator key transaction right after the hard fork, registered validators that did not forge a block and did not send a transaction in the Lisk Core 3 history could simply send a transaction any time before the hard fork. Then the sender public key of this transaction will be set as the generator key as described above. Nevertheless, every validator is encouraged to eventually send an update generator key transaction with a generator key different from the public key used for transaction signatures, because of the security benefits added by the concept of generator keys.

Invalid Ed25519 Public Key

The chosen value for the Ed25519 public key for which every signature validation should fail, 0x7fffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff, is the little endian encoding of 2^255-1. As described in the first point of the decoding section of RFC 8032, decoding this value fails. This in turn results in signature verification failure as described in the first point of the verification section, regardless of the message and the signature.

Assigning BLS Key to Validator Accounts

In blockchains created with Lisk SDK 6, one needs to register a valid BLS key in order to become a validator. This results in an exception for Lisk Core 4 as the existing validators do not have a valid BLS key right after the hard fork. Since the DPoS module expects that each validator account has a BLS key and a proof of possession set in the snapshot block (see here), each validator is assigned a fixed public key for which signature validation will always fail, along with a dummy value for the proof of possession. This proof of possession will never be evaluated as the DPoS and the validator module handle an exception for this particular case (see here and here). Every validator should then register a valid BLS key via a register BLS key transaction, ideally within the bootstrap period described below.

Bootstrap Period

There will be a bootstrap period in which a fixed set of delegates will constitute the set of active delegates. This period will last for one week assuming no missed blocks. This is done by setting the initRounds property in the snapshot block to the corresponding value, which is given by DPOS_INIT_ROUNDS. The reason to have this period is that the DPoS module selects only delegates with a valid BLS key outside of the bootstrap period (there will be an update to LIP 0057 that adapts the delegate selection in this way). Thus, without a bootstrap period, the DPoS module could not select any delegates right after the hard fork, which would halt the chain.

The bootstrap period gives each delegate a sufficiently large time window to submit a register BLS key transaction. The expectation is that most delegates have a valid BLS key after the bootstrap period. In the unexpected case that there are fewer than 101 non-banned delegates with a BLS key, the DPoS module would simply select a smaller set of delegates that get the block slots of a round assigned round robin.
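To relate the one-week duration to block slots and rounds, the following sketch does the arithmetic. The block time of 10 seconds is an assumption that is not stated in this draft; ROUND_LENGTH is taken from the constants table. The helper names are hypothetical.

```python
SECONDS_PER_WEEK = 7 * 24 * 3600
BLOCK_TIME = 10      # seconds per block slot; assumed, not stated in this draft
ROUND_LENGTH = 103   # from the constants table


def slots_per_week(block_time=BLOCK_TIME):
    """Number of block slots in one week, assuming no missed blocks."""
    return SECONDS_PER_WEEK // block_time


def full_rounds_per_week(block_time=BLOCK_TIME, round_length=ROUND_LENGTH):
    """Number of complete rounds that fit into one week."""
    return slots_per_week(block_time) // round_length


if __name__ == "__main__":
    print(slots_per_week())        # 60480
    print(full_rounds_per_week())  # 587
```

Under these assumptions, one week contains 60,480 block slots, which is 587 full rounds of 103 blocks. The helpers make it easy to convert between the two units when reading DPOS_INIT_ROUNDS.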

Backwards Compatibility

This LIP describes the protocol and process for conducting a hard fork on the Lisk Mainnet.

Reference Implementation

TBD